
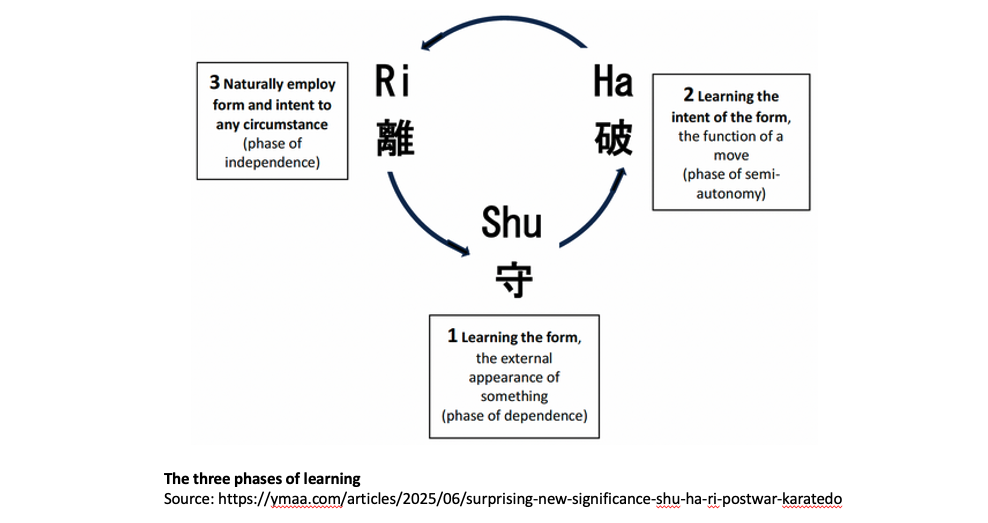

- Shu (imitation),

- Ha (innovation), and

- Ri (detachment or transcendence).

- Fitts & Posner’s Three-Stage Model describes progression from the Cognitive stage, where learners consciously think through each movement. It then moves to the Associative stage of refinement and error reduction. Finally, it reaches the Autonomous stage where skills become largely unconscious.

- Adams’s Two-Stage Model offers a simpler linear progression from the Verbal-Motor stage to the Motor stage, where performance becomes increasingly automatic.

- The Dreyfus model proposes that learners progress through five levels: novice, advanced beginner, competent, proficient, and expert.

- Perhaps most influential is Scaffolding and Fading, rooted in Lev Vygotsky’s theory of the zone of proximal development. This approach deliberately simplifies complex skills into manageable components, with teachers providing extensive initial support before gradually removing assistance.

-

He’s the creator of the Tinderbox notemaking app. ↩︎

- A video interview with the author.

- A summary of the argument, adapted from the introduction: The Surprising New Significance of Shu Ha Ri in Postwar Karatedo.

- A 1983 BBC documentary about Okinawan karate: The Way of the Warrior: Karate, the Way of the Empty Hand. This is extraordinary and a real classic! (mentioned in a footnote on p.97).

AI is not helping the learning process

💬 “When teachers rely on commonly used artificial intelligence chatbots to devise lesson plans, it does not result in more engaging, immersive or effective learning experiences compared with existing techniques”

See also:

Civic Education in the Age of AI: Should We Trust AI-Generated Lesson Plans? | CITE Journal

I’m the author of Shu Ha Ri: The Japanese Way of Learning, for Artists and Fighters, available now.

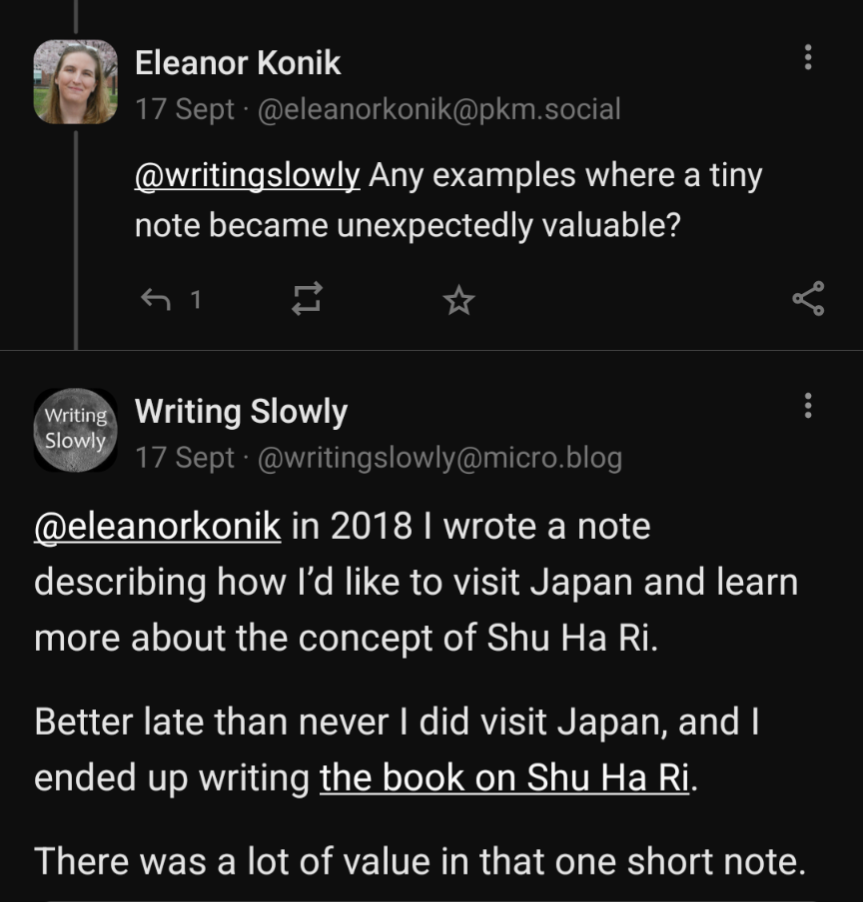

What’s your most valuable note?

@eleanorkonik@pkm.social asked:

“Any examples where a tiny note became unexpectedly valuable?”

Here’s my reply.

In 2018 I wrote a note describing how I’d like to visit Japan and learn more about the concept of Shu Ha Ri.

Better late than never, I did visit Japan, and I ended up writing the book on Shu Ha Ri.

There was a lot of value in that one short note.

Create your own mental models

When he was still in high school my cousin took to pulling old cars apart, completely, then putting them back together. This was a real learning experience, and the beginning of an entire career working with motor vehicles. François Chollet, author of Deep Learning with Python, said:

💬 To really understand a concept, you have to “invent” it yourself in some capacity. Understanding doesn’t come from passive content consumption. It is always self-built. It is an active, high-agency, self-directed process of creating and debugging your own mental models. - as quoted by Simon Willison.

This is what I’m doing with my collection of working notes, my Zettelkasten. I disassemble ideas and concepts, de-contextualise them, and reassemble them into new arrangements under quite different circumstances. From fragments you can build a greater whole.

Sometimes ‘invention’ is mashing together two or more existing ideas in new and unexpected ways. But sometimes it’s simply rebuilding an existing idea from the ground up, to create something previously unimaginable.

I wrote my book about the Japanese concept of learning, Shu Ha Ri, because in the fifteen years since I first encountered this concept, no one else had written a clear introduction. It’s quite literally the book I wanted to read for myself. Well, I didn’t invent the idea, but in writing the definitive introduction I’ve certainly taken it apart, examined it from every angle, worked out how to explain it to others, and put it back together.

On Friday I received a nice text from a martial arts instructor, who’d been handed the book by someone else:

💬 I absolutely loved it. First time in a long time I immediately reread a book.

And so I hope you’ll enjoy giving the book a test drive too.

Photo by Geoff Charles, 1962. National Library of Wales. Llyfrgell Genedlaethol Cymru / The National Library of Wales on Unsplash


Check out my book, Shu Ha Ri: The Japanese Way of Learning, for Artists and Fighters. And you can also subscribe to the weekly Writing Slowly email newsletter.

Provocative words about learning, teaching, AI, and the timely value of history

Do you like links? Here’s what I’ve come across on the Web lately: provocative words about learning, teaching, AI, and the timely value of history.

💬 “What A.I. can’t do is feel the shape of silence after someone says something so honest we forget we’re here to learn. What it can’t do is pause mid-sentence because it remembered the smell of its father’s old chair. What it can’t do is sit in a room full of people who are trying—and failing—to make sense of something that maybe can’t be made sense of. That’s the job of teaching.” — Sean Cho A., on teaching college during the rise of AI • The Rumpus.

💬 “When human inquiry and creativity are offloaded to anthropomorphic AI bots, there is a risk of devaluing critical thinking while promoting cognitive offloading. If we turn the intellectual development of the next generation over to opaque, probabilistic engines trained on a slurry of scraped content, with little transparency and even less accountability, we are not enhancing education; we are commodifying it, corporatizing it, and replacing pedagogy with productivity.” — Courtney C. Radsch, We should all be Luddites • Brookings.

💬 “While the school says its students test in the top 1% on standardized assessments, AI models have been met with skepticism by educators who say they’re unproven.” — The $40,000 a year school where AI shapes every lesson, without teachers. CBS News. Wikipedia: Alpha School. I’ll revisit this in a few years to see just how hard it crashed (or not).

💬 “As our lives become more enmeshed with technological devices, services, and processes, I think that awareness is something which we the technology-wielding should strive for if we want to build a properly humane and empathic world.” — Matthew Lyon, The Fourth Quadrant of Knowledge • lyonheart.

💬 “Knowledge of history and awareness of history can allow us to see patterns, make connections, and identify incipient problems. It can give us a language and a set of references which allows us to step back, broaden our view, and see things and sometimes warn ourselves and others when necessary.” — Timothy Snyder on Stalin and Stephen Miller.

I’m the author of Shu Ha Ri: The Japanese Way of Learning, for Artists and Fighters, available now. And for all the crunchy, fresh Writing Slowly goodness you can sign up to the weekly digest. It’s exactly like a bunch of radishes, but made out of email.

Publishing means no more hiding

Revelation must be terrible, knowing you can never hide your voice again. – David Whyte

Publishing my book, I had the strange feeling of having crossed an invisible but very powerful threshold.

It was while signing copies at a small and very supportive gathering that it dawned on me that the thoughts that used to be just in my head are now public and exposed to the world – and since I’ve lodged this work in every State Library in Australia, they’ll never again be totally private.

I had thought I just wanted to publish my words, to release my book into the wild, as it were, to allow it to find its readers.

So it never occurred to me that I might have been benefiting in some way from the obscurity of the drafting process.

Not that I want to hide my voice – far from it.

Nor that I’m expecting a million readers. Again, far from it.

But the knowledge that I now have one unique reader – you – with whom my words will perhaps connect whether I bid them or not, well that changes things somehow.

And it’s certainly a revelation to realise there’s no going back.

My book, Shu Ha Ri: The Japanese Way of Learning, for Artists and Fighters, is out now. Please check it out.

Curious about Hypercuriosity

One reason I make notes and write is that I’m curious about everything.

I’ve written previously about how to be interested in everything. And I’ve also written about busybodies, hunters and dancers - three different styles of curiosity.

It was the ‘dancer’ style of curiosity that resonated most with me:

“This type of curiosity is described as a dance in which disparate concepts, typically conceived of as unrelated, are briefly linked in unique ways as the curious individual leaps and bounds across traditionally siloed areas of knowledge. Such brief linking fosters the generation or creation of new experiences, ideas, and thoughts.”

So I was interested to see that Anne-Laure Le Cunff, author of Tiny Experiments and founder of Ness Labs, has been exploring what she calls ‘hypercuriosity’, which may be associated with ADHD.

Well, I guess I’m the living proof. I set out this evening to write about my book, Shu Ha Ri: The Japanese Way of Learning, for Artists and Fighters, but I ended up writing about something completely different instead: hypercuriosity.

Come to think of it, that’s how the book got written in the first place, by pursuing my curiosity. And come to think of it, that’s how I do practically everything.

In writing the book I was particularly attracted by the value placed on the Japanese concept of shoshin (初心), ‘beginner’s mind’ - a quality often downplayed in Western contexts, where experts are supposed to already know everything.

I’m more interested in not knowing - and then going to great lengths to find out.

Links:

Brar, G. (2024, November 14). The hypercuriosity theory of ADHD: An interview with Anne-Laure Le Cunff. Evolution and Psychiatry (Substack).

Gupta, S. (2025, September 16). People with ADHD may have an underappreciated advantage: Hypercuriosity. Science News.

Le Cunff, A. (2024). Distractibility and impulsivity in ADHD as an evolutionary mismatch of high trait curiosity. Evolutionary Psychological Science, 10, 282.

Le Cunff, A. (2025, July 15). When curiosity doesn’t fit the world we’ve built: How do we design a world that supports hypercurious minds? Ness Labs.

If you’re curious to catch the latest Writing Slowly action, please subscribe to the weekly email digest. All the posts, delivered straight to your in-box.

Japanese Shu Ha Ri: Is it Better Than Western Learning Methods?

I’m the author of Shu Ha Ri: The Japanese Way of Learning, for Artists and Fighters, available now.

The way we approach learning fundamentally shapes how deeply we can master a skill. In the West, we’ve largely embraced linear progression. We move methodically from theoretical understanding to practical application. And the dominant image of learning is that of a ladder or a pyramid which the learner climbs step by step to reach the top. Yet there exists an alternative philosophy that challenges this conventional wisdom. It’s the Japanese concept of Shu Ha Ri. It’s not better, perhaps, but I’ve found it different in interesting and fruitful ways. Interesting enough to write a short introduction to the concept, since no one else had done so.

Western learning models, certainly those I grew up with, characteristically begin with cognitive frameworks before advancing to hands-on practice. Students typically start with rules and theories before attempting simplified components. Only then do they attempt the full complexity of their chosen discipline. In contrast, Shu Ha Ri represents a cyclical process. It moves through three distinct phases: Shu (imitation), Ha (innovation), and Ri (detachment or transcendence).

This isn’t so much a ladder, a one-way journey, as a circle, or better, a repeated spiral, in which experts don’t stop learning but return to the basics and understand them anew.

While Western models serve their purpose in structured environments, the Shu Ha Ri approach offers crucial insights for achieving true mastery, particularly in disciplines that demand intuitive understanding rather than merely intellectual comprehension.

How Western Linear Learning Actually Works

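Western psychology has produced several influential models that support linear skill acquisition, among them Fitts and Posner’s three-stage model, Adams’s two-stage model, the Dreyfus five-level model, and scaffolding and fading.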

All these models assume that effective learning requires moving from simple, understood components toward complex, integrated performance. Obviously this isn’t wrong. But this linear progression may inadvertently create barriers to the deep, intuitive knowledge that characterizes genuine expertise.

Why Shu Ha Ri Creates Deeper Mastery

Western models excel at creating competent practitioners, but they may limit the development of true mastery. By prioritizing theoretical understanding and simplified components, these approaches can prevent learners from accessing the profound depths that Shu Ha Ri makes possible.

An important aspect of learning risks being overlooked - the way in which students often learn best from observing and imitating practitioners in action. As psychologist Albert Bandura observed, learning is fundamentally a social activity.

Does Starting With the “Whole” Beat the “Simplified”?

Western scaffolding deliberately fragments skills into digestible pieces. A violin student might spend considerable time on bow hold before attempting a simple melody, or a chef might practice knife cuts in isolation before approaching actual recipes. Yet this reductionist approach, though logical, can prevent learners from experiencing the skill’s true essence.

Shu Ha Ri takes a radically different approach. In the Shu stage, students engage immediately with the complete, unsimplified form. Recently I visited the Japanese city of Matsumoto, which is where music educator Shinichi Suzuki (1898-1998) lived and worked. I remembered first encountering the Suzuki method of music education years previously, and marveling at how very young children were encouraged to play complete pieces of music and to be immersed in musical culture from a very young age. A student of the Japanese tea ceremony doesn’t begin with broken-down movements or theoretical principles. They observe and attempt to replicate an entire ritual (known as temae), albeit simplified, from their very first lesson. And this immersion in the “whole” allows learners to absorb subtle relationships between components that might be lost in fragmented approaches.

Why Imitation Surpasses Cognition

Western educational models place considerable emphasis on cognitive understanding before physical practice. Shu Ha Ri fundamentally inverts this priority. The Shu stage prioritizes imitation and embodied practice while deliberately minimizing cognitive load.

This is somewhat consistent with Albert Bandura’s presentation of observational learning, and the idea that we learn best not in isolation, but socially, by observing and imitating effective practitioners.

Students are encouraged to copy their master’s movements and timing without initially concerning themselves with underlying principles. And this allows “embodied cognition” to develop naturally through physical practice rather than intellectual analysis.

A jazz musician learning through traditional Western methods might spend considerable time studying music theory and chord progressions before improvising. But the Shu Ha Ri approach would emphasize extensive listening and playing along with masters. This allows the musician to develop intuitive understanding of rhythm and phrasing, along with harmonic relationships and timing that cannot be fully captured in theory books. This was in fact very close to the approach of Clark Terry (1920-2015), legendary jazz trumpeter and educator, who proposed:

“imitation, assimilation, and then innovation”.

Can Learning Be Cyclical Rather Than Linear?

Western models typically imply completion. They suggest reaching a final “autonomous” stage where learning essentially concludes. Newly minted experts risk being led to believe they have somehow finished their education. Perhaps we have to keep talking about ‘lifelong learning’ because otherwise we might forget to do it. But Shu Ha Ri presents a fundamentally different philosophy. Rather than linear progression toward completion, it describes a cyclical journey of continuous refinement.

After achieving mastery (Ri), practitioners commonly return to foundational practices (Shu) with deeper understanding. They uncover subtleties previously invisible to them. So a master calligrapher might return to basic brush strokes after decades of practice. By returning to their ‘beginner’s mind’ they may find new and previously unrecognised depths in movements they’ve performed thousands of times. This cyclical concept suggests that true mastery isn’t a destination. It’s an ongoing process of deepening understanding.

Which Path Actually Leads to Mastery?

Western learning models possess considerable strengths, particularly in academic settings where clear progression markers are essential. These models prove invaluable for complex technical skills where safety and precision demand systematic understanding. Medical training, engineering education, and scientific research all benefit from structured, theoretical foundations.

However, when our goal extends beyond competency to genuine mastery, Shu Ha Ri offers a complementary framework. This is particularly true in disciplines requiring intuitive understanding or creative expression. And the traditional Japanese approach recognizes that true mastery involves more than accumulated knowledge or perfected technique.

Shu Ha Ri encompasses a quality of understanding that emerges through sustained practice and cyclical refinement. It prioritizes deep immersion in complete forms and wholeness over fragmented components. Linear models efficiently create capable practitioners. But the cyclical and holistic philosophy of Shu Ha Ri nurtures the lifelong pursuit of true mastery. Its imitation-based approach and emphasis on complete forms create deeper understanding than fragmented learning.

We’re increasingly focused on rapid skill acquisition and short cuts to expertise. Yet this ancient wisdom reminds us that the deepest forms of human expertise can’t be rushed or simplified. They must be lived, embodied, and continually refined through patient, cyclical practice.

Read more in Shu Ha Ri: The Japanese Way of Learning, for Artists and Fighters.

And did you know you can sign up to the Writing Slowly weekly email digest?

References

Adams, J. A. (1971). A closed-loop theory of motor learning. Journal of Motor Behavior, 3(2), 111-150.

Bandura, A. (1962). Social Learning through Imitation. University of Nebraska Press: Lincoln, NE.

Bradić, S., Kariya, C., Callan, M., & Jones, L. (2023). Universality and applicability of shu-ha-ri concept through comparison in everyday life, education, judo and kata in judo. The Arts and Sciences of Judo (ASJ) Vol. 03 No. 02.

Dreyfus S, Dreyfus H. (1980). A five stage model of the mental activities involved in directed skill acquisition. California University Berkeley Operations Research Center. Accessed at www.dtic.mil/dtic/inde…

Fitts, P. M., & Posner, M. I. (1967). Human performance. Brooks/Cole.

Freimann, R. (nd) An Interview with Clark Terry. banddirector.com. Accessed at https://banddirector.com/interviews/an-interview-with-clark-terry-by-rachel-freiman/

Hammerness, K., Darling-Hammond, L., & Bransford, J. (2005). How teachers learn and develop. In L. Darling-Hammond & J. Bransford (Eds.), Preparing teachers for a changing world: What teachers should learn and be able to do (pp. 358-389). Jossey-Bass.

Magill, R. A., & Anderson, D. I. (2017). Motor learning and control: Concepts and applications (11th ed.). McGraw-Hill Education.

Peña A. (2010). The Dreyfus model of clinical problem-solving skills acquisition: a critical perspective. Medical education online, 15, 10.3402/meo.v15i0.4846. Accessed at doi.org/10.3402/m….

Vygotsky, L. S. (1978). Mind in society: The development of higher psychological processes. Harvard University Press.

Wood, D., Bruner, J. S., & Ross, G. (1976). The role of tutoring in problem solving. Journal of Child Psychology and Psychiatry, 17(2), 89-100.

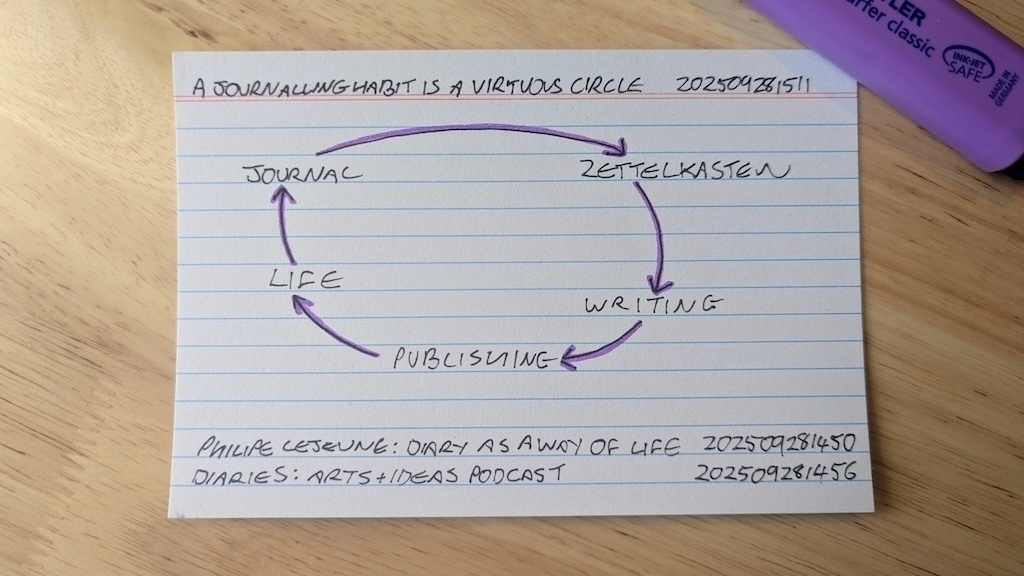

Keeping a diary is a way of living

“A diary is not only a text: it is a behaviour, a way of life, of which the text is a by-product” - French theorist Philippe Lejeune. Source: Arts & Ideas Podcast.

Exactly so. I have a journalling habit, which fuels my Zettelkasten (my collection of linked notes), which in turn fuels my writing. This in turn affects my life, which I journal about. It’s a virtuous circle.

I’m the author of Shu Ha Ri: The Japanese Way of Learning, for Artists and Fighters.

And did you know you can sign up to the Writing Slowly weekly email digest?

Zettelkasten podcast episodes

Here are a couple of podcast interviews where the Zettelkasten approach to making notes is discussed in detail. Enjoy!

William Wadsworth (Exam Study Expert) interviews Sönke Ahrens, author of How to Take Smart Notes. Apple Podcasts.

Sönke Ahrens on Niklas Luhmann’s writing process:

“The main part of the writing process happened in this in-between space most people, I believe, neglect. They write notes, they read, they polish their manuscripts, but I think few people understand the importance of taking proper notes and organising them in a way that a manuscript, an argument, a chapter can evolve out of that.”

Jackson Dahl (Dialectic) interviews Billy Oppenheimer, Ryan Holiday’s research assistant, on staying attuned for clues. Apple Podcasts.

“I adopted/adapted Ryan Holiday’s notecard system, which he learned from Robert Greene. And it’s just literally boxes of 4x6 notecards. I’ve never seen Robert’s actual cards, but I have seen Ryan’s. His are filled with shorthands: maybe a phrase, a word, or a single sentence that conveys a story from some book. They are little reminders capturing the broad strokes of something. You notate it with the book and page number so you can go back and find the specific details.”

“Niklas Luhmann also has another great idea about making notes for an ignorant stranger… Because that’s what you are when you come back to it. We think, “There’s no way I’m going to forget this story.” You come back to it, and it’s highlighted and underlined. You’re like, “What was I loving about this?” I try to make the note cards for an ignorant stranger. You should be able to pick one up and have enough context to make out what this thing is. And so in a similar way, in the margins of books, I try to do that for myself.”

An atomic note isn’t just about ideas; it’s about time. Start smaller, stop sooner, and your notes become easier to reuse and connect. ✍️ Post here: The shortest writing session that could possibly work.

#NoteTaking #KnowledgeWork #zettelkasten #writingtips

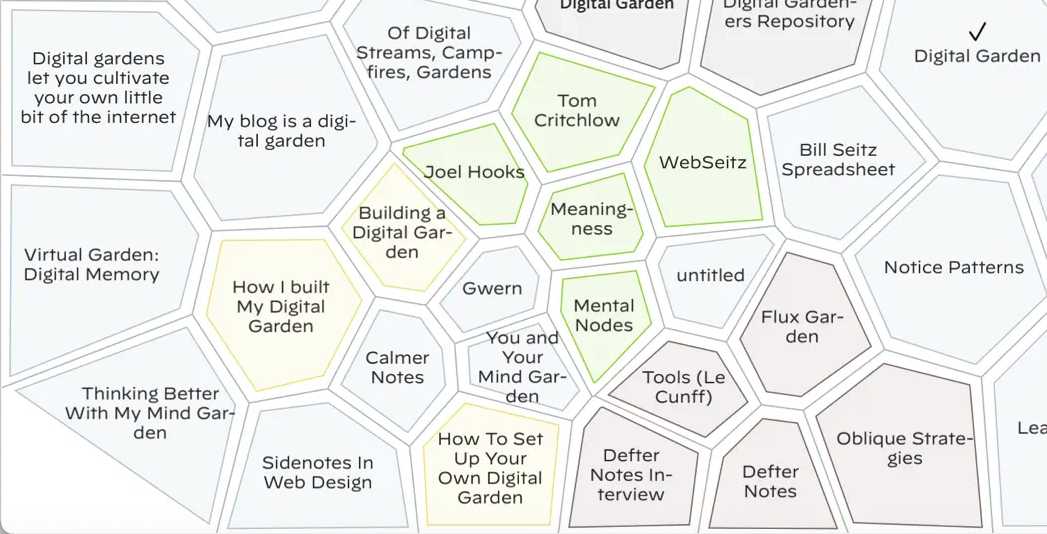

Back to the Information City? How knowledge visualisation shapes the journey

I was intrigued by Mark Bernstein’s¹ co-authored article revisiting the concept of the city as a visual metaphor for information in the era of hypertext.

Intrigued, because I’m not convinced the city makes things clearer. In fact the first thing that came into my mind was Steven Marcus’s claim from way back that urban dwellers experience a particular kind of estrangement. They sense that “the city is unintelligible and illegible”. This appears in a collection of essays on the Victorian city, in an essay titled ‘Reading the Illegible.’ (1973:257).

This idea - that the city can’t be read - put me in mind of Jonathan Raban’s proto-postmodernist book Soft City (1974), where he contrasts the book with the city, the legible with the illegible.

“The city and the book are opposed forms: to force the city’s spread, contingency, and aimless motion into the tight progression of a narrative is to risk a total falsehood. There is no single point of view from which we can grasp the city as a whole. That indeed is the distinction between the city and the small town. A good working definition of metropolitan life would center on its intrinsic illegibility.” (p. 219)

As it happens, the article’s authors seem to conclude that the Information City is not a particularly promising metaphor to guide the navigation of complex information structures:

“It seems clear that the Information City is better suited to constructive than to exploratory hypertext.”

This ties in nicely with my take on anthropologist Tim Ingold’s view that creativity is more about ‘itineration’ (wayfinding) than ‘iteration’ (making an object).

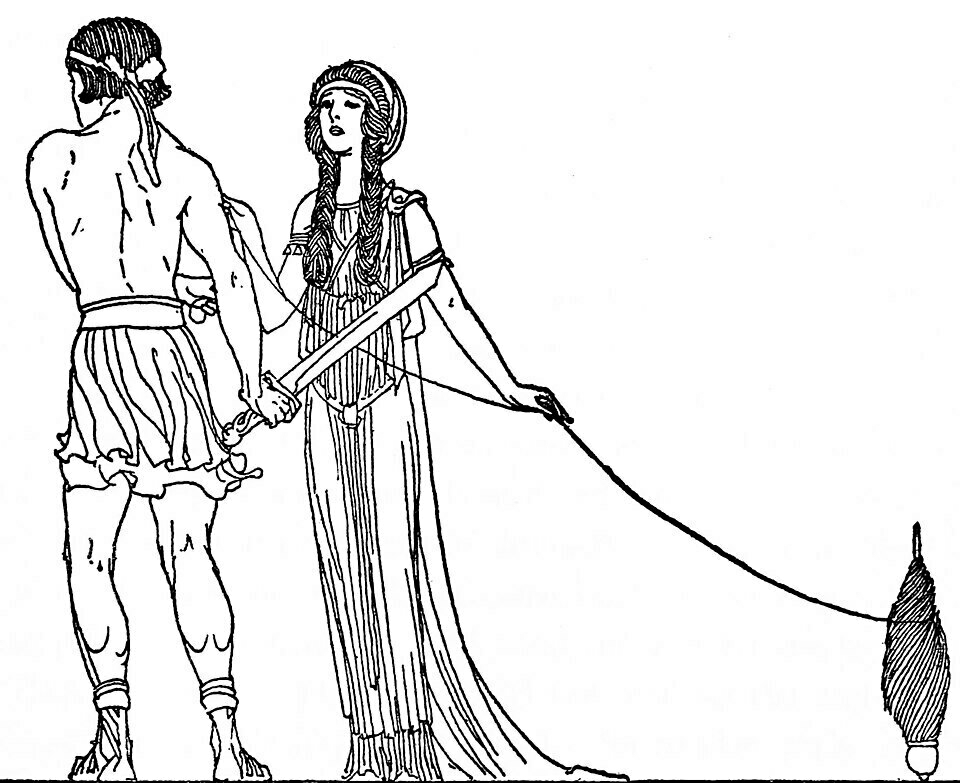

Would it be possible, then, somehow to depict the wayfinding process in and of itself without in advance also reifying the landscape? I’m imagining a walk through an unfamiliar place, which through repetition gradually becomes familiar, and may be rendered yet more familiar by establishing idiosyncratic markers, the way Ariadne’s thread guided Theseus through the Minotaur’s labyrinth.

*[Image source]: Internet Archive Book Images, No restrictions, via Wikimedia Commons.*


The authors say of their attempted information visualisation:

“The Information City may be superb for some and intolerable for those who might prefer to work in a Piranesi dungeon.”

My view is that despite the efforts of UI creators, we don’t really have a choice in this matter. We are already living in Piranesi’s dungeon, in which meaning is lost and found and lost again, and where forgetting is as important as remembering.

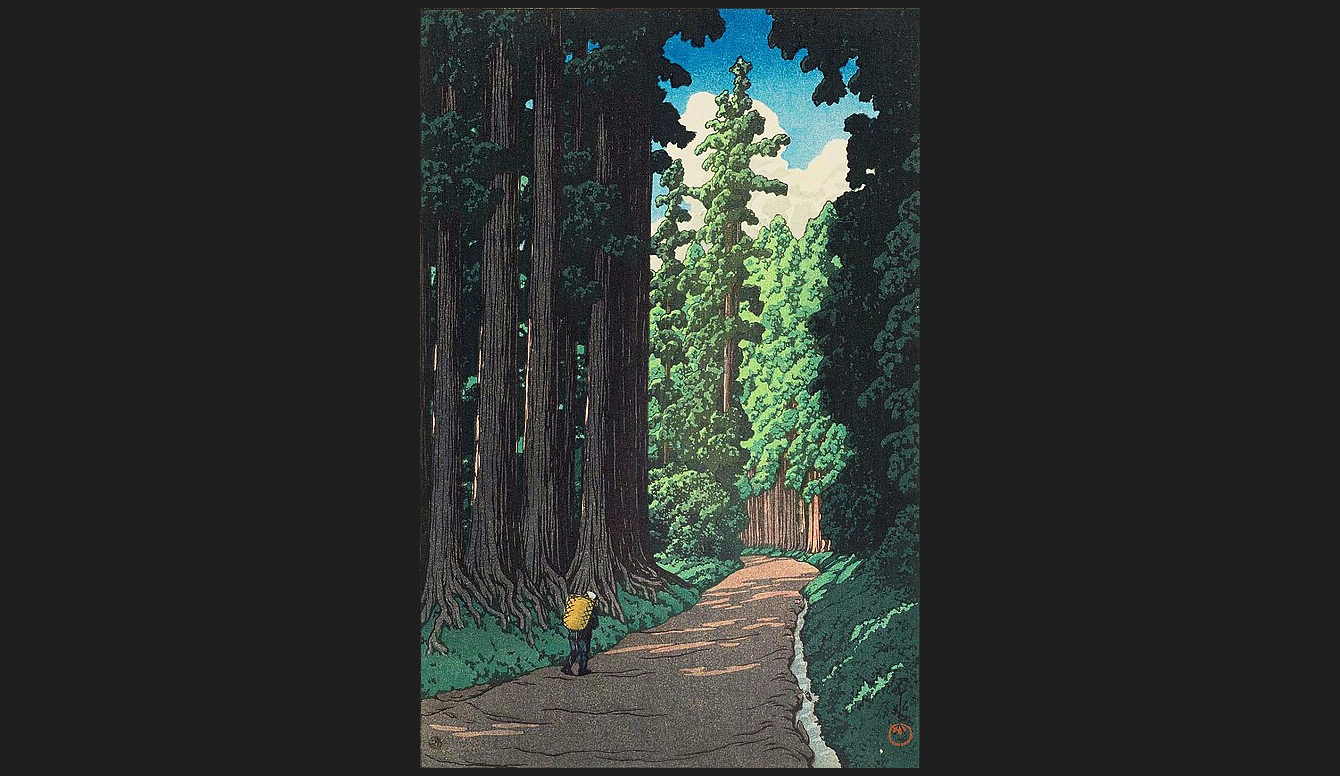

That said, I’m as wary of the dream of information legibility as I am of the dream of urban legibility. The metaphor that works for me is of an immense and unknown forest, the deep forest of accumulated knowledge. Though travelers may have no sense of the ultimate extent of the forest, and even if there is no thread, they can make one as they explore. They may still provide a report, like a travel journal:

“Here is the route I took, and here are the landmarks I discovered on the way.”

This deep subjectivity allows for a limited form of objectivity:

“with my report in hand you too can follow this path through the trees.”

*[Image source: Hasui Kawase], Public domain, via Wikimedia Commons.*


References:

Bernstein, Mark, Silas Hooper, and Mark Anderson. “Back to the Information City.” Paper presented at HT 2025: 36th ACM Conference on Hypertext and Social Media, Chicago, IL, September 15–18, 2025. https://doi.org/10.1145/3720553.3746664.

Dyos, H. J., and Michael Wolff. The Victorian City: Images and Realities. Vol. 1. London: Routledge & Kegan Paul, 1973.

Ingold, Tim. “The Textility of Making.” Cambridge Journal of Economics 34 (2010): 91–102. https://doi.org/10.1093/cje/bep042.

Raban, Jonathan. Soft City: The Art of Cosmopolitan Living. New York: E.P. Dutton, 1974.

Use case for the Zettelkasten

Why use a Zettelkasten? Why indeed? Geeky online legend Gwern was rather negative:

Most people simply have no need for lots of half-formed ideas, random lists of research papers, and so on. This is what people always miss about “Zettelkasten”: are you writing a book? Are you a historian or Teutonic scholar like Niklas Luhmann? Do you publish a dozen papers a year? Are you the 1% of the 1%? No? Then why do you think you need a Zettelkasten?

He argued that tools for thought don’t actually aid thought and that the obviously useful alternative is ‘systems that think for the user instead’.

Wait, what? Systems that think for the user?? I disagree with this very strongly. Sure, it’s true that as they stand, ‘tools for thought’ are no substitute for humans putting in the effort. But I don’t see that as a valid criticism.

The human effort is the part that matters. The effort of thought is actually a feature not a bug. Human thought is preferable to AI computation not because it’s more efficient (although it very often is) but because it’s more human, and humans warm to the activity of other humans.

For instance, in early 2025 a Lithuanian explorer attempted to cross the Pacific Ocean in a one-man rowing boat. He made it to within 740km of the Australian coast, when he was assailed by a cyclone and prevented from sleeping for several days straight. In extremis he finally set off his SOS beacon and the Australian navy came to rescue him, despite the 16-metre-high swells they had to brave. The adventurer only just made it out alive. Returned from the dead, back on shore and reunited with his wife in a photogenic moment, he sank to his knees as she embraced him.

Now this was all very interesting, despite the fact that the very ocean water that was trying to capsize him routinely crosses vast distances with no problem. No one cares about the brine, no one feels for its plight and the media never report on its travails. Did you ever see a headline like this:

“Alone and exhausted, a desperate ocean wave makes it gratefully to shore”?

No you didn’t. That was a rhetorical question. Human interest stories work because it’s humans that we’re interested in.

*Won't someone think of the poor wave?*


Improbably, this wasn’t the only Lithuanian paddler to survive a run-in with Australian waters in recent years. The previous year a Lithuanian kayaker slipped into some rapids on Tasmania’s Franklin River, where he was jammed between rocks and pinned down by a flow of 13 tonnes of water per second. Again, he was the subject of a daring and extreme rescue. Meanwhile, no one thought twice about how the water felt.

And no one cares either when it’s AI that’s supposedly doing the ‘thinking’. It’s inanimate. But they do care quite a lot about a solitary Lithuanian in mortal danger. And so on.

I’ve never visited the Franklin River, which this photograph I took in New Zealand clearly illustrates.


Getting back on track, the thought that goes into making notes matters, quite simply because thought just does matter. Conversely, when ‘systems think for the user’, well, whatever that is, it’s not thought.

But beyond this, I can’t help wondering why we need to justify at all a practice so basic as simply making notes and linking them. My half-formed thoughts might not be as good as Gwern’s (OK, they definitely aren’t), but at least they’re my half-formed thoughts.

In a co-authored conference paper, Mark Bernstein, creator of the Tinderbox app, makes what ought to be an obvious point:

“It may frequently be the case that we ourselves do not know the ultimate uses of our notes, yet still find note-taking rewarding.” - Bernstein et al., 2025, Back to the Information City

Reflecting on Gwern’s dismissal of the Zettelkasten approach, I’m reminded of self-help guru Oliver Burkeman, who had a different criticism to offer. He said he had tried a Zettelkasten but found it too organised. That got me wondering, how much mess is just enough?

For what it’s worth, I’ve found the Zettelkasten approach very practical and quite productive, despite my not being particularly organised. Here’s a book it helped me write and publish: Shu Ha Ri: The Japanese Way of Learning, for Artists and Fighters.

And of course, my Zettelkasten is helping me to carry on Writing Slowly. You can follow the frenetic action with the weekly digest - a blog magically transformed into an email. Amazing!

See also: From tiny drops of writing great rivers will flow.

Reference:

Bernstein, Mark, Silas Hooper, and Mark Anderson. ‘Back to the Information City’. Paper presented at HT 2025: 36th ACM Conference on Hypertext and Social Media, September 15–18, 2025, Chicago, IL, USA. 2025. Preprint PDF.

#Zettelkasten #PKMS #notetaking #toolsforthought #Lithuanian #HT2025

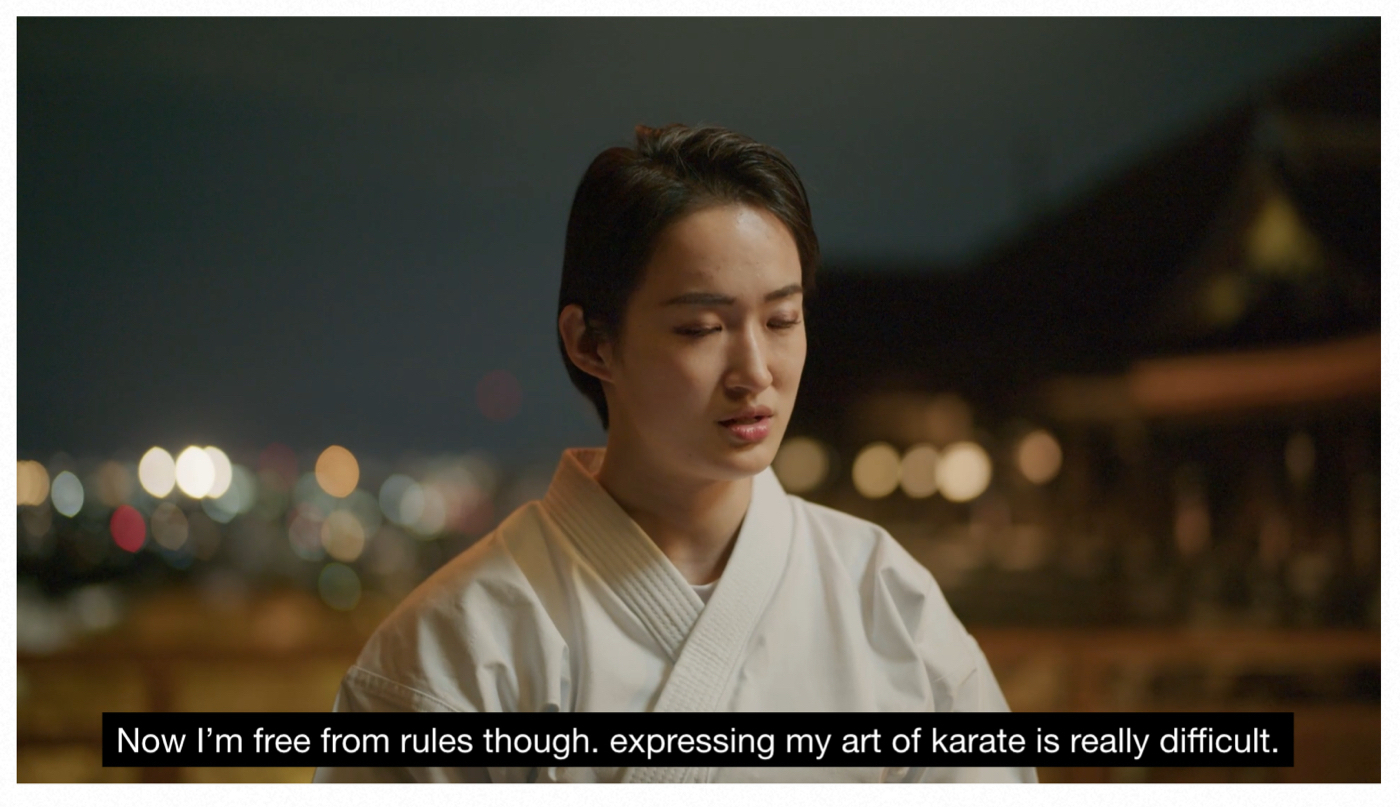

What does it mean to transcend the rules?

A martial arts dedication performed by Japanese karate practitioner Kiyou Shimizu at Kiyomizu-dera Temple, Kyoto.

This former world champion has retired from competitive karate, and is finding new ways to express her mastery of the discipline.

Watching this dedication reminded me of some words of the kabuki actor Nakamura Kanzaburo XVIII:

“You break the mold because there is a mold, and if there is no mold, you have no form.”1

I’m the author of Shu Ha Ri: The Japanese Way of Learning, for Artists and Fighters. It’s a short and accessible introduction to the concept, available now.

And if you liked this article, why not subscribe to the weekly Writing Slowly email digest?

#Shuhari #martialarts #karate #kyoto #kiyomizudera

Is there a Zettelkasten method?

Quite a few people write and speak about the Zettelkasten, a simple way of maintaining a note making system, but is there really any such thing? Some reflections on the seemingly obsolete practice of writing notes on small slips of paper and arranging them so they can be found again.

Mastering Any Skill, the Japanese Way

📚 A review of Analysis of Shu Ha Ri in Karate-Do: When a Martial Art Becomes a Fine Art by Hermann Bayer, Ph.D.

Most people believe that mastery of a skill comes from practicing harder and longer. ‘10,000 hours of deliberate practice’ has acquired an authority unwarranted by the actual evidence (Epstein, 2021). Yet countless learners, whether in business, the arts, or sport, hit a plateau they can’t break through. The problem isn’t effort. It’s that they’re missing a hidden progression that separates the true experts from the merely experienced.

For centuries, Japanese masters have understood this journey. It has three distinct phases, Shu, Ha, Ri, and each demands a different mindset and approach. Skip one, and your growth stalls. Get them right, and you move beyond imitation into competence and ultimately mastery. Unlike many Western theories of learning, it’s not a linear set of stages to be climbed like the rungs of a ladder: instead it’s a cycle, a spiral of increasing competence where the earliest phase is never forgotten.

Hermann Bayer’s Analysis of Shu Ha Ri in Karate-Do is one of the clearest and most extensive explanations of this progression I’ve encountered. While his examples come from Okinawan karate, his real subject is the universal process of moving from novice to master, potentially in any discipline.

Bayer brings to his writing both deep scholarship and decades of martial arts expertise. This shows, but the book remains reasonably accessible for general readers. He unpacks philosophical ideas without jargon, showing exactly how they play out in practice. One of his most important clarifications is effectively a major theme of the book: Shu Ha Ri is not an Okinawan tradition. Despite its frequent modern association with karate, Bayer shows that the concept comes from Japanese fine arts, especially from the tea ceremony, and only entered karate after karate’s fairly recent introduction to mainland Japan, in 1922. This detail is more than just historical trivia; it changes how you see the concept. Shu Ha Ri is not tied to a single fighting style, and certainly not to karate. It’s potentially a transferable blueprint for mastering any complex skill.

Although Shu Ha Ri has wide applicability and has been adopted in many different disciplines, Bayer does focus heavily on karate, and on its Okinawan origins. This is the author’s specialist field. He is, after all, the author of the two-volume Analysis of Genuine Karate, which explores Okinawa as the cradle of true karate. So readers curious about Shu Ha Ri, but with limited interest in karate and Okinawan history, may wish for more examples from other disciplines. But the underlying framework is so universal that the author’s examples still work. You don’t need to know a kata from a kumite to apply what you learn.

If you are a practitioner of karate, I suspect that after reading this book you’ll never see it in quite the same way. But what makes the concept of Shu Ha Ri valuable beyond martial arts is its potential application to any field where performance and creativity matter. For instance, writers might see how to move from imitating their influences to developing a unique voice. Leaders might understand when to enforce process and when to encourage innovation. Artists, athletes, and entrepreneurs might recognise the moment to step beyond rules without losing their foundation.

If you care about personal growth and continuous improvement, or want a proven roadmap to mastery, this book will give you both the theory and the practical insight to get there. By the time you finish it, you won’t just understand Shu Ha Ri, you’ll be inspired to integrate this learning philosophy into your own life. And in case you were tempted, you will never again confuse Shu Ha Ri with the historical traditions of Okinawan karate.

Details

Analysis of Shu Ha Ri in Karate-Do: When a Martial Art Becomes a Fine Art by Hermann Bayer, Ph.D. (June 2025, ISBN: 9781594399954)

Purchase directly from the publisher, YMAA.

Resources

I’m the author of Shu Ha Ri: The Japanese Way of Learning, for Artists and Fighters. It’s a short and accessible introduction to the concept, available now.

And if you enjoyed this review, you may like to subscribe to the weekly Writing Slowly email digest.

Open, free and poetic

The Web is 34 years old! Following on from Plenty of ways to write online, here are some really practical resources to help you create your own presence online:

Keeping the Web free, open and poetic.

Old hands will probably find a few useful tips here too.

Oh, and here’s another great big list of useful personal website stuff. Actually, I’m making a note of this for my own ‘going down the rabbit-hole’ purposes:

Resources List for the Personal Web

It’s also easier than ever to publish a book. Check out mine: Shu Ha Ri: The Japanese Way of Learning, for Artists and Fighters. And to stay connected, subscribe to the weekly email digest.

*Image source: Public Domain, Wikimedia.

#indieweb #webwriting #worldwideweb #blogging

Plenty of ways to write online

Writing online is more accessible than ever. We can maintain control of our own work by publishing on personal websites while also syndicating content to social media. And as I’ve discovered, it’s also easier than ever to publish a book.

Watch in awe as a fleeting thought becomes a lasting note

I describe in detail how I wrote a blog post and then repurposed it as a permanent note in my Zettelkasten collection. This is the opposite of my usual workflow, where I create publishable writing from my existing notes.

Hot takes on our future with AI

Here are eight ‘hot takes’ on the latest problems, questions and opportunities large language models are giving us. It was going to be just three, but the hot takes are coming thick and fast right now. These links are shared alongside my personal reflections on the impact and future of AI that does your writing for you while cosplaying as a human.

I designed a book in three and a half hours

A while ago, well, quite a long while ago, I designed a book in three and a half hours. Fun, yes, but it wasn’t very publishable.

Now, years later, I’ve finally got round to updating and redesigning the whole thing.

Yes, I’m still writing slowly but I’m excited to say it will soon be available for sale - so watch this space for more information.